nvidia Multiple Process Service (MPS)

What MPS is?MPS is a binary-compatible client-server runtime implementation of the CUDA API which consists of several components.Control Daemon Process – The control daemon is responsible for starting

What MPS is?

When to use MPS? (The Benefits of MPS)

GPU utilization

A single process may not utilize all the compute and memory-bandwidth capacity available on the GPU. MPS allows kernel and memcopy operations from different processes to overlap on the GPU, achieving higher utilization and shorter running times.

Reduced on-GPU context storage

Without MPS each CUDA processes using a GPU allocates separate storage and scheduling resources on the GPU. In contrast, the MPS server allocates one copy of GPU storage and scheduling resources shared by all its clients. Volta MPS supports increased isolation between MPS clients, so the resource reduction is to a much lesser degree.

Reduced GPU context switching

Without MPS, when processes share the GPU their scheduling resources must be swapped on and off the GPU. The MPS server shares one set of scheduling resources between all of its clients, eliminating the overhead of swapping when the GPU is scheduling between those clients.

(没有换入换出就意味着所有进程的相关内容(包括什么呀)需要常驻显存,会不会有很大的占用开销?)

Identifying Candidate applications

MPS is useful when each application process does not generate enough work to saturate the GPU. Multiple processes can be run per node using MPS to enable more concurrency. Applications like this are identified by having a small number of blocks-per-grid.

Further, if the application shows a low GPU occupancy because of a small number of threads-per-grid, performance improvements may be achievable with MPS.Using fewer blocks-per-grid in the kernel invocation and more threads-per-block to increase the occupancy per block is recommended. MPS allows the leftover GPU capacity to be occupied with CUDA kernels running from other processes.

问:如果kernel的blocks-per-grid小且threads-per-block多,比较适合使用MPS( Tensorflow有没有提供控制grid,block,thread的接口)

答:The optimal launch configuration will depend on the specifics of the compute kernel. As such, there is no single value. You’ll need to identify a particular operation of interest to investigate. As far as the sources, some TF native kernels will use functions from tensorflow/core/util/gpu_launch_config.h when determining their launch configuration. For XLA, you might start in tensorflow/compiler/xla/service/gpu/partition_assignment.h.

Another approach is to run your network through the nvprof or nsight systems profilers. In the timeline view, the kernel properties will show the actual launch configuration used.

Using Nsight Compute to Inspect your Kernels | NVIDIA Technical Blog

These cases arise in strong-scaling situations, where the compute capacity (node, CPU core and/or GPU count) is increased while the problem size is held fixed. Though the total amount of computation work stays the same, the work per process decreases and may underutilize the available compute capacity while the application is running. With MPS, the GPU will allow kernel launches from different processes to run concurrently and remove an unnecessary point of serialization from the computation

MPS limitations

- MPS is only supported on the Linux operating system. The MPS server will fail to start when launched on an operating system other than Linux.

- MPS is not supported on Tegra platforms. The MPS server will fail to start when launched on Tegra platforms.

- MPS requires a GPU with compute capability version 3.5 or higher. The MPS server will fail to start if one of the GPUs visible after applying CUDA_VISIBLE_DEVICES is not of compute capability 3.5 or higher. CUDA Compatibility :: NVIDIA Data Center GPU Driver Documentation

- The Unified Virtual Addressing (UVA) feature of CUDA must be available, which is the default for any 64-bit CUDA program running on a GPU with compute capability version 2.0 or higher. If UVA is unavailable, the MPS server will fail to start.

- The amount of page-locked host memory that can be allocated by MPS clients is limited by the size of the tmpfs filesystem (/dev/shm).

- Exclusive-mode restrictions are applied to the MPS server, not MPS clients.

- Only one user on a system may have an active MPS server.

- The MPS control daemon will queue MPS server activation requests from separate users, leading to serialized exclusive access of the GPU between users regardless of GPU exclusivity settings.(不同user的MPS server激活请求会被queue,因此只有当队前用户MPS server彻底执行完成,下一个用户的MPS server才能启动)

- All MPS client behavior will be attributed to the MPS server process by system monitoring and accounting tools (e.g. nvidia-smi, NVML API)

GPU Compute Modes

Three Compute Modes are supported via settings accessible in nvidia-smi.

PROHIBITED – the GPU is not available for compute applications.

EXCLUSIVE_PROCESS – the GPU is assigned to only one process at a time, and individual process threads may submit work to the GPU concurrently.

DEFAULT – multiple processes can use the GPU simultaneously. Individual threads of each process may submit work to the GPU simultaneously.

Using MPS effectively causes EXCLUSIVE_PROCESS mode to behave like DEFAULT mode for all MPS clients. MPS will always allow multiple clients to use the GPU via the MPS server.

When using MPS it is recommended to use EXCLUSIVE_PROCESS mode to ensure that only a single MPS server is using the GPU, which provides additional insurance that the MPS server is the single point of arbitration between all CUDA processes for that GPU.

GPU compute mode的设置方法:sudo nvidia-smi -i 0 -c EXCLUSIVE_PROCESS

Performance

Client-Server Connection Limits

The pre-Volta MPS Server supports up to 16 client CUDA contexts per-device concurrently. Volta MPS server supports 48 client CUDA contexts per-device. These contexts may be distributed over multiple processes. If the connection limit is exceeded, the CUDA application will fail to create a CUDA Context and return an API error from cuCtxCreate() or the first CUDA Runtime API call that triggers context creation. Failed connection attempts will be logged by the MPS server.

Volta MPS Execution Resource Provisioning

Volta MPS supports limited execution resource provisioning. The client contexts can be set to only use a portion of the available threads. The provisioning capability is commonly used to achieve two goals:

- Reduce client memory foot print: Since each MPS client process has fully isolated address space, each client context allocates independent contest storage and scheduling resources. Those resources scale with the amount of threads available to the client. By default, each MPS client has all available threads useable. As MPS is usually used with multiple processes running simultaneously, making all threads accessible to every client is often unnecessary, and therefore wasteful to allocate full context storage. Reducing the number of threads available will effectively reduce the context storage allocation size.

- Improve QoS: The provisioning mechanism can be used as a classic QoS mechanism to limit available compute bandwidth. Reducing the portion of available threads will also concentrate the work submitted by a client to a set of SMs, reducing destructive interference with other clients submitted work.

The limit will be internally rounded up to the next hardware-supported thread count limit. On Volta, the executed limit is reflected through device attribute cudaDevAttrMultiProcessorCount.

Setting the limit does not reserve dedicated resources for any MPS client context. It simply limits how much resources can be used by a client context. Kernels launched from different MPS client contexts may execute on the same SM, depending on load-balancing. Also, the limit is configured for a client process when it starts, and cannot be changed for the client process afterwards.

设置参数后只是限制了进程使用GPU线程的上限,如果低于上限,GPU会根据负载实时调整线程的位置(没有写错)

线程的移动会不会带来额外的开销?

A common provisioning strategy is to divide the available threads equally to each MPS client processes (i.e. 100% / n, for n expected MPS client processes). This strategy will allocate close to the minimum amount of execution resources, but it could restrict performance for clients that could occasionally make use of idle resources. A more optimal strategy is to divide the portion by half of the number of expected clients (i.e. 100% / 0.5n) to give the load balancer more freedom to overlap execution between clients when there are idle resources.

By default, each client is provisioned to have access to all available threads. This will allow the maximum degree of scheduling freedom, but at a cost of higher memory footprint due to wasted execution resource allocation. The memory usage of each client process can be queried through nvidia-smi.

The provisioning limit can be set via a few different mechanisms for different effects.

- The MPS control utility provides 2 sets of commands to set / query the limit of all future MPS clients. See section 4.1.1 for more details.

- The limit can be further constrained for new clients by setting the environment variable CUDA_MPS_ACTIVE_THREAD_PERCENTAGE for a client process. See section 4.2.5 for more details.

Threads & Linux Scheduling

On pre-Volta GPUs, launching more MPS clients than there are available logical cores on your machine will incur increased launch latency and will generally slow down client-server communication due to how the threads get scheduled by the Linux CFS (Completely Fair Scheduler). For setups where multiple GPUs are used with an MPS control daemon and server started per GPU, we recommend pinning each MPS server to a distinct core. This can be accomplished by using the utility ‘taskset’, which allows binding a running program to multiple cores or launching a new one on them. To accomplish this with MPS, launch the control daemon bound to a specific core, e.g. `taskset –c 0 nvidia-cuda-mps-control –d`. The process affinity will be inherited by the MPS server when it starts up.

Architecture

background

CUDA is a general purpose parallel computing platform and programming model that leverages the parallel compute engine in NVIDIA GPUs to solve many complex computational problems in a more efficient way than on a CPU.

A CUDA program starts by creating a CUDA context, either explicitly using the driver API or implicitly using the runtime API, for a specific GPU. The context encapsulates all the hardware resources necessary for the program to be able to manage memory and launch work on that GPU.

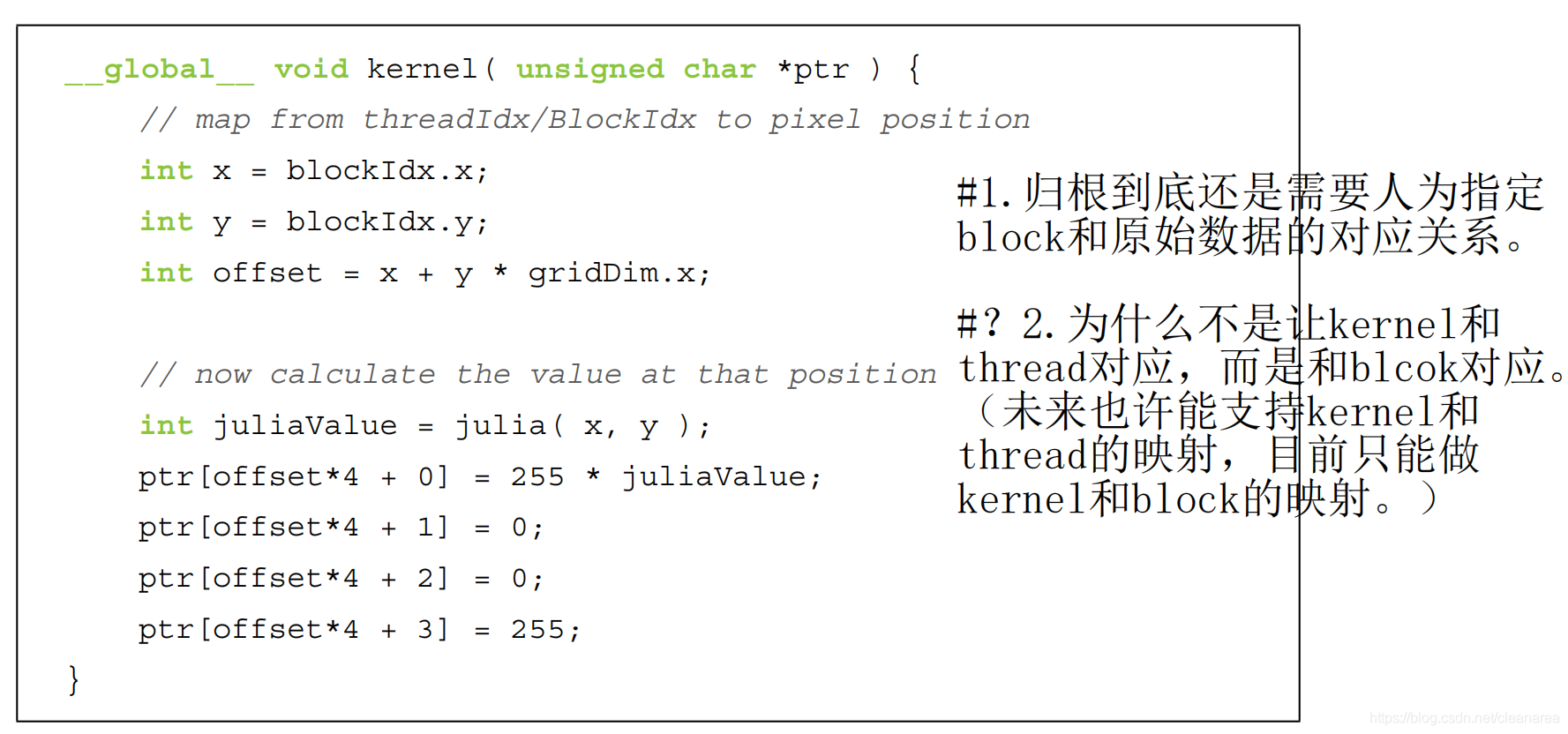

Launching work on the GPU typically involves copying data over to previously allocated regions in GPU memory, running a CUDA kernel that operates on that data, and then copying the results back from GPU memory into system memory. A CUDA kernel consists of a hierarchy of thread groups that execute in parallel on the GPUs compute engine.

All work on the GPU launched using CUDA is launched either explicitly into a CUDA stream, or implicitly using a default stream. A stream is a software abstraction that represents a sequence of commands, which may be a mix of kernels, copies, and other commands, that execute in order. Work launched in two different streams can execute simultaneously(可以并行操作), allowing for coarse grained parallelism.

CUDA streams are aliased onto one or more ‘work queues’ on the GPU by the driver. Work queues are hardware resources that represent an in-order sequence of the subset of commands in a stream to be executed by a specific engine on the GPU, such as the kernel executions or memory copies. GPU's with Hyper-Q have a concurrent scheduler to schedule work from work queues belonging to a single CUDA context. Work launched to the compute engine from work queues belonging to the same CUDA context can execute concurrently on the GPU.

The GPU also has a time sliced scheduler to schedule work from work queues belonging to different CUDA contexts. Work launched to the compute engine from work queues belonging to different CUDA contexts cannot execute concurrently. This can cause underutilization of the GPU’s compute resources if work launched from a single CUDA context is not sufficient to use up all resource available to it.

Additionally, within the software layer, to receive asynchronous notifications from the OS and perform asynchronous CPU work on behalf of the application the CUDA Driver may create internal threads: an upcall handler thread and potentially a user callback executor thread.

1. 每个cuda程序都会创建一个运行时环境(context),该环境包含了cuda程序运行所需的全部硬件资源(比如threads)

2. GPU上使用CUDA创建的任务(如kernels,copies等)都会封装成一个单的stream,stream内的任务顺序执行;不同stream里的任务可以并行执行(parallel)

3.

CUDA在GPU上的并行操作

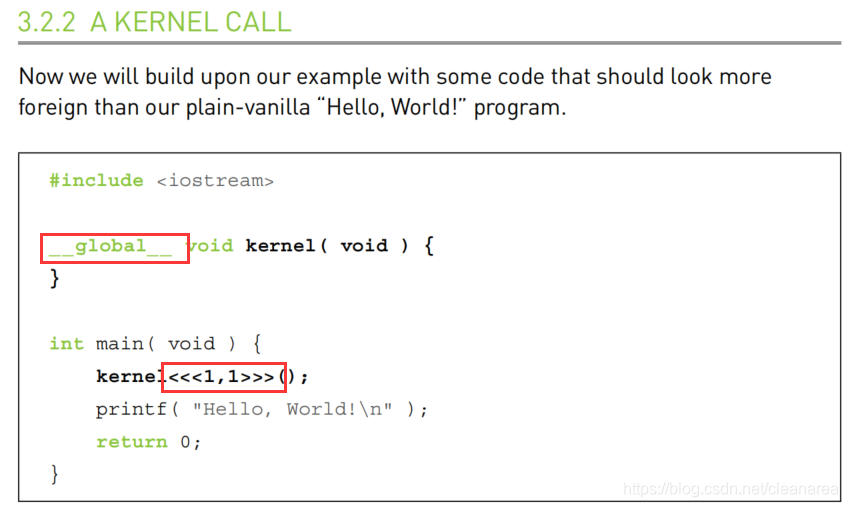

kernel的定义

所有在GPU上的运行的操作都可以定义为kernel,(or 只有特定操作比如memcopy才是kernel,kernel的调用有什么特点?)

shared memory and synchronization

We’re glad you asked. The CUDA C compiler treats variables in shared memory differently than typical variables. It creates a copy of the variable for each blockthat you launch on the GPU. Every thread in that block shares the memory, but threads cannot see or modify the copy of this variable that is seen within otherblocks.This provides an excellent means by which threads within a block can communicate and collaborate on computations. Furthermore, shared memorybuffers reside physically on the GPU as opposed to residing in off-chip DRAM. Because of this, the latency to access shared memory tends to be far lowerthan typical buffers, making shared memory effective as a per-block, software managed cache or scratchpad.shared memory只能被同一个block中的thread共享,且需要做好线程间的同步操作。

MPS和Hyper-Q的区别

MPS allows overlapping of kernel and memcopy operations from different processes on the GPU to achieve maximum utilization.

Hardware Changes - Hyper-Q which allows CUDA kernels to be processed concurrently on the same GPU。

问题总结

问题1

(pytorch) wujing@Turing:~/Projects/pytorch_mps$ nvprof python run_model.py

Using cache found in /home/wujing/.cache/torch/hub/pytorch_vision_v0.6.0

==10887== NVPROF is profiling process 10887, command: python run_model.py

Traceback (most recent call last):

File "/home/wujing/Projects/pytorch_mps/run_model.py", line 30, in <module>

output = model(input_batch)

File "/home/wujing/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/module.py", line 727, in _call_impl

result = self.forward(*input, **kwargs)

File "/home/wujing/.cache/torch/hub/pytorch_vision_v0.6.0/torchvision/models/inception.py", line 190, in forward

x, aux = self._forward(x)

File "/home/wujing/.cache/torch/hub/pytorch_vision_v0.6.0/torchvision/models/inception.py", line 127, in _forward

x = self.Conv2d_1a_3x3(x)

File "/home/wujing/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/module.py", line 727, in _call_impl

result = self.forward(*input, **kwargs)

File "/home/wujing/.cache/torch/hub/pytorch_vision_v0.6.0/torchvision/models/inception.py", line 430, in forward

x = self.conv(x)

File "/home/wujing/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/module.py", line 727, in _call_impl

result = self.forward(*input, **kwargs)

File "/home/wujing/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/conv.py", line 423, in forward

return self._conv_forward(input, self.weight)

File "/home/wujing/anaconda3/envs/pytorch/lib/python3.9/site-packages/torch/nn/modules/conv.py", line 419, in _conv_forward

return F.conv2d(input, weight, self.bias, self.stride,

RuntimeError: cuDNN error: CUDNN_STATUS_NOT_INITIALIZED

==10887== Warning: The user does not have permission to profile on the target device. See the following link for instructions to enable permissions and get more information: https://developer.nvidia.com/NVSOLN1000

==10887== Profiling application: python run_model.py

==10887== Profiling result:

No kernels were profiled.

No API activities were profiled.

==10887== Warning: Some profiling data are not recorded. Make sure cudaProfilerStop() or cuProfilerStop() is called before application exit to flush profile data.

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)